The digital world keeps changing, and new kinds of technology keep appearing, some of which raise serious questions. One that has drawn a lot of attention, and a lot of worry, is the so-called deepfake AI clothes remover. This is a very specific use of deepfake technology, which can create images or videos that look incredibly real yet show something that never actually happened. It's a topic many people are talking about, and one we should all try to understand better, particularly because it touches on privacy, consent, and ethics.

To get a handle on this, we first need to look at what deepfake technology is at its core. Deepfake technology can seamlessly stitch anyone in the world into a video or photo they never actually took part in. It is a type of artificial intelligence used to create convincing fake images, videos, and audio recordings. The term describes both the technology and the resulting fake media.

A deepfake is a specific kind of synthetic media in which a person in an image or video is swapped with another person's likeness, or in which their appearance is changed in ways that seem very real. This includes images, videos, and audio generated by artificial intelligence (AI) to portray something that does not exist in reality. Deepfakes rely on deep learning, a branch of AI loosely modeled on how humans recognize patterns, so it's almost as if the computer is learning to see things in a human-like way. These AI models typically analyze many images and videos of a person, often thousands, to learn their features.

Table of Contents

- What Exactly Are Deepfakes?

- The Rise of AI Clothing Manipulation

- How This Technology Works (A Simple Look)

- Serious Concerns and Ethical Dilemmas

- Protecting Yourself and Others

- The Future of Deepfake Technology

- Frequently Asked Questions

What Exactly Are Deepfakes?

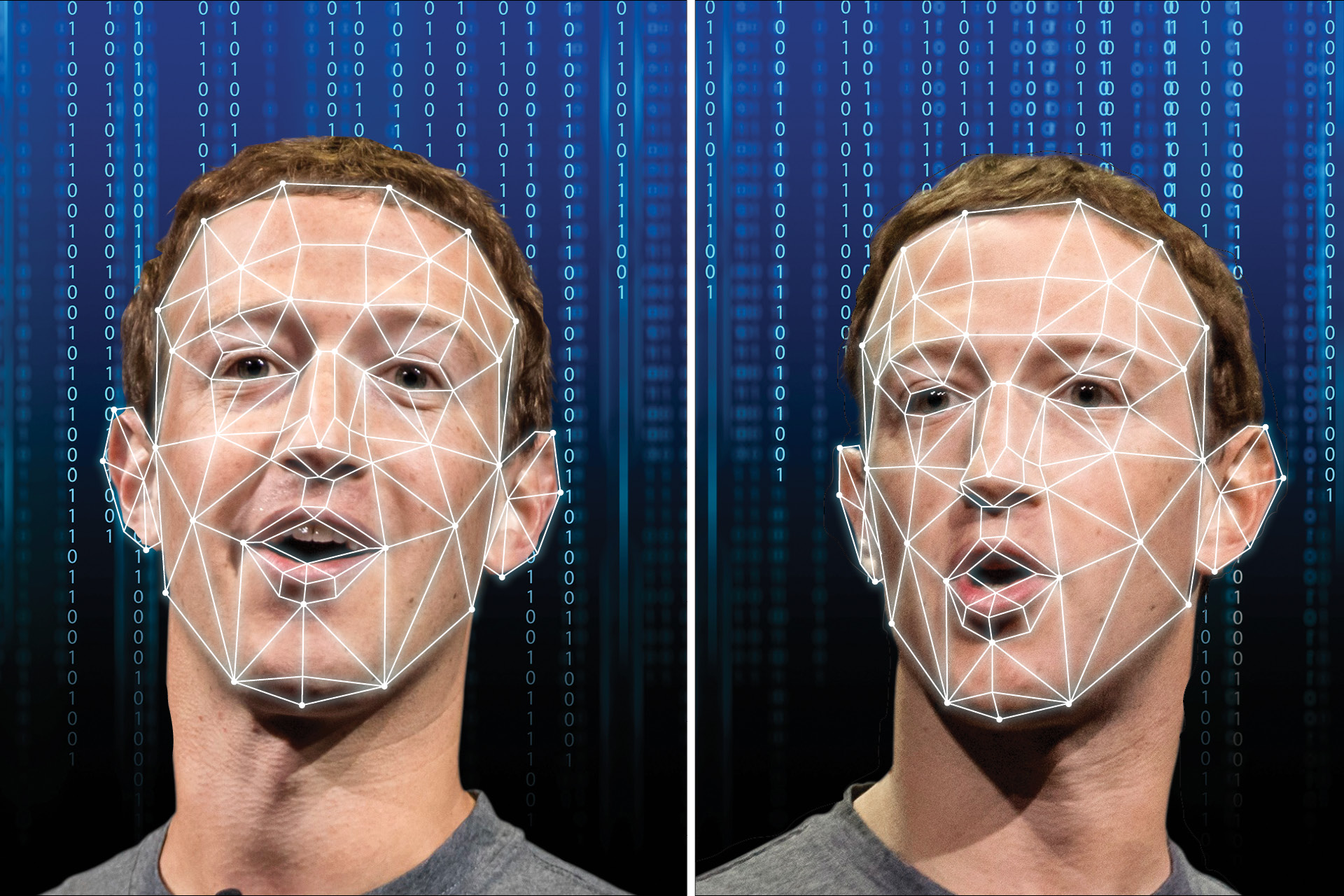

Let's take a moment to think about what deepfakes are. A deepfake is media that has been digitally altered to replace a person's face or body with that of another. This means a video of someone could show them saying or doing things they never did, which is a bit unsettling. Deepfakes are created using deep learning technology, hence the term, a blend of "deep learning" and "fake." Deep learning is a part of AI that helps computers learn from lots of data, much like how a person learns from experience.

A deepfake is also an elaborate form of synthetic media that uses AI and machine learning (ML) techniques to fabricate or manipulate audio, video, or images so that they appear convincingly real. The results can be so good that it's hard for the average person to tell what is real and what is not. The same generative AI methods can also create images, audio, and text from scratch.

The core idea behind deepfakes is to train a computer program on a vast amount of data, like pictures and videos of a particular person. The program then learns the person's facial expressions, their mannerisms, and even their voice patterns. Once it has learned enough, it can then create new, fake content that looks and sounds just like that person. It's really quite a clever trick of technology, if you think about it.

These AI models analyze many images and videos of a person, looking for patterns. They pick up on how light hits the face, how the mouth moves during speech, and all the little details that make someone unique. Then they can apply those learned patterns to a different video or image, making it seem as though the original person is there. It's a process that requires a lot of computing power and a good amount of source material.

The goal, in many cases, is to make something that fools the eye and ear, appearing as genuine as possible. This ability to create such convincing fake media is why deepfakes have become such a big topic of discussion, particularly when we talk about their less positive uses. It truly shows how far AI has come, doesn't it?

The Rise of AI Clothing Manipulation

Now, let's talk about the specific application that has caused a stir: the deepfake AI clothes remover. This refers to AI tools that can digitally alter images or videos to make it appear as though a person is wearing different clothes, or in some very concerning instances, no clothes at all. It's a rather alarming development for many people, especially when considering personal privacy and consent.

The ability to change what someone is wearing in a picture or video, without their permission, presents a lot of problems. It means that an image of anyone could be taken and then manipulated to show them in a way they never agreed to be seen. This raises serious questions about personal boundaries and digital safety, which is something we really need to think about.

This particular use of deepfake technology has gained attention because it directly affects a person's image and dignity. Unlike some other deepfakes that might spread misinformation, this type often targets individuals directly, creating content that can cause significant harm. It's a very personal form of digital manipulation.

The existence of such tools means that anyone's photo could be at risk of being altered in this way. This makes people feel less secure about sharing images online, even with friends and family. It also puts a lot of pressure on social media platforms and other online services to find ways to detect and remove such harmful content, which is a big challenge for them.

It's important to remember that these tools are not magic; they are built on the same deep learning principles as other deepfakes. They learn patterns of clothing, body shapes, and how light interacts with different textures. Then, they apply these learned patterns to create the altered image. It's a very advanced form of digital editing, really.

The rapid growth of this specific deepfake application highlights a broader issue with AI: how do we ensure these powerful tools are used responsibly? It’s a question that many experts are trying to answer right now, and it's not an easy one. The technology itself is neutral, but its uses can be quite varied, some good, some not so good.

How This Technology Works (A Simple Look)

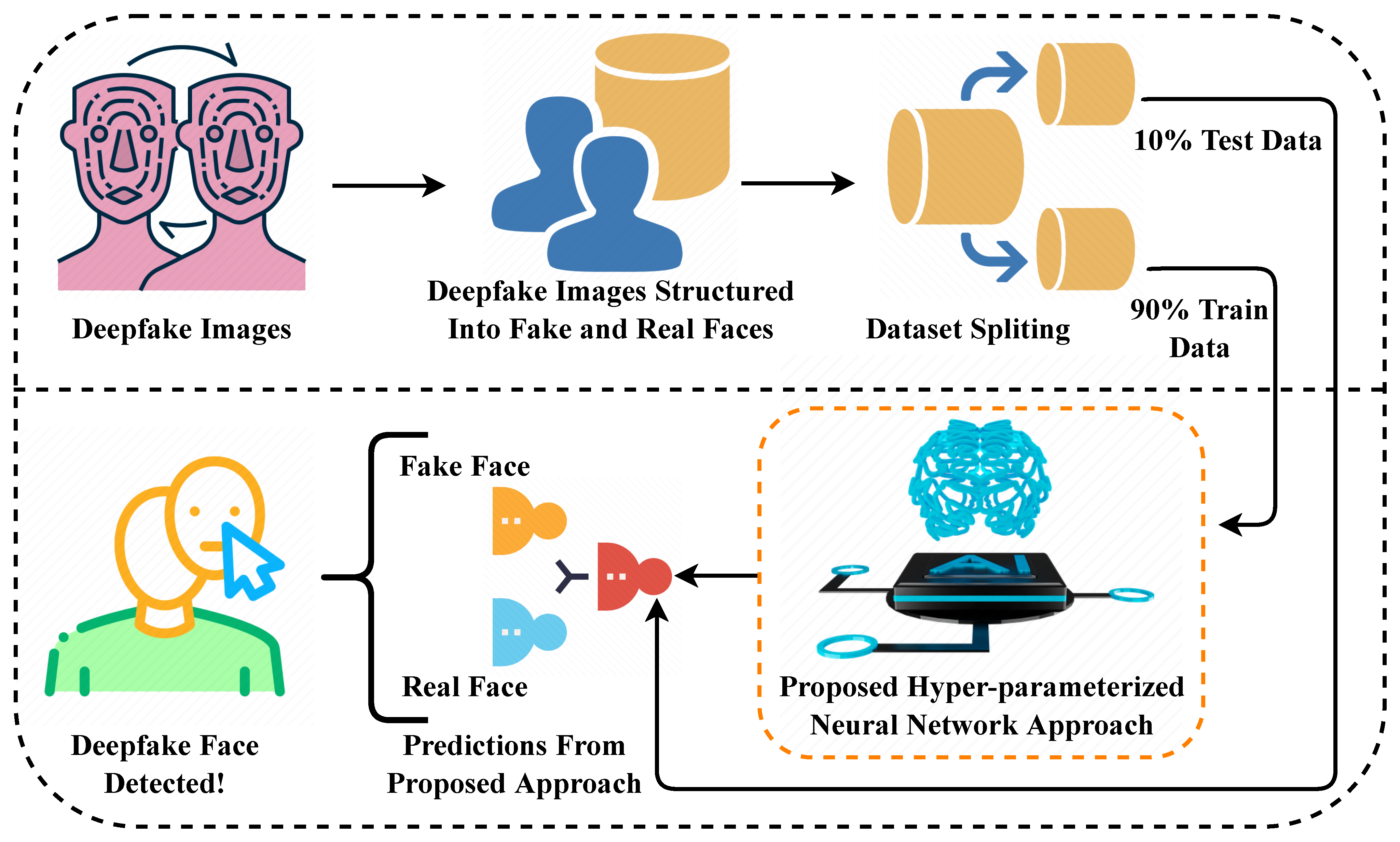

To get a basic idea of how a deepfake AI clothes remover works, think about how AI learns. Deepfakes rely on deep learning, a branch of AI loosely modeled on how humans recognize patterns. In the case of clothing manipulation, the AI is trained on many images of different types of clothing and how they look on various body shapes, and also on what bodies look like without clothes, which is a lot of data.

The AI essentially learns the "rules" of how clothes drape, how light creates shadows on fabric, and how different materials behave. It also learns how human bodies are shaped. Once it has absorbed all this information, it can then take an existing image or video of a person and, in a way, "paint" new clothing onto them, or remove existing clothing, making it look very natural. It's a complex process, but the outcome can appear very simple and real.

This process often involves something called a generative adversarial network, or GAN for short. Imagine two parts of the AI working against each other. One part, the "generator," creates the fake image. The other part, the "discriminator," tries to figure out whether the image is real or fake. They keep going back and forth, with the generator getting better at making convincing fakes and the discriminator getting better at spotting them. This back-and-forth training is what makes the results so convincing.

For a deepfake AI clothes remover, the generator would create an image where the person's clothes are altered or removed. The discriminator would then try to tell whether that altered image looks real or has been tampered with. This constant feedback loop helps the AI refine its ability to create very believable manipulated images. It's a bit like an artist practicing a craft over and over until they master it.

The more data the AI has to learn from, the better and more realistic its creations become. This is why these tools can be so powerful and, at the same time, so concerning. The AI doesn't understand ethics or consent; it just follows its programming to create the most convincing image possible. This is why human oversight and strong ethical guidelines are so important.

The speed at which these tools can generate such content is also a factor. What used to take skilled graphic designers hours or days can now be done in moments by an AI. This accessibility means that the potential for misuse is much higher, which is a pretty serious consideration for everyone.

Serious Concerns and Ethical Dilemmas

The existence of deepfake AI clothes remover tools raises some very serious concerns and ethical questions for society. Perhaps the biggest worry is the invasion of privacy. When someone's image can be altered without their knowledge or consent, it strips them of control over their own likeness, something many people regard as a fundamental right.

Another major issue is the potential for harassment and abuse. Such manipulated images can be used to shame, blackmail, or otherwise harm individuals, particularly women and girls, who are disproportionately targeted. This can lead to severe emotional distress, damage to reputation, and even real-world consequences for the victims.

The spread of non-consensual intimate imagery, even if it's fake, is a form of digital violence. It can ruin lives and create a lasting sense of violation. Laws are still catching up to this new type of harm, which means victims often have limited recourse. This makes the situation even more difficult for those affected.

Beyond individual harm, there's a broader erosion of trust in digital media. If we can't tell what's real from what's fake, it becomes harder to believe anything we see online. This could have implications for journalism, legal evidence, and even our basic understanding of reality. It's a slippery slope, in a way, when truth becomes so easily manipulated.

There are also questions about the responsibility of the creators of these AI tools. While the underlying technology may have legitimate uses, its potential for harm is undeniable. Should there be stricter regulations on who can access or develop such capabilities? These are complex questions that society is still grappling with, and there are no easy answers.

The ease with which these deepfakes can be shared across the internet means that once a manipulated image is out there, it's incredibly difficult to remove it completely. The internet has a long memory, and images can resurface years later, continuing to cause distress. This permanent digital footprint is a very troubling aspect of the problem.

We need to have conversations about digital ethics, consent in the age of AI, and how we protect individuals in a world where anyone's image can be digitally altered. It's a challenge that requires input from technologists, policymakers, and the public alike. We are all affected by this, after all, and need to find ways to deal with it.

Protecting Yourself and Others

Given the concerns surrounding deepfake AI clothes remover tools, it's important to think about how we can protect ourselves and others. One of the first steps is simply being aware that this technology exists and understanding its capabilities. Knowledge is power, as they say, and knowing what's possible helps us be more cautious online.

For individuals, exercising caution about which photos and videos are shared publicly online is a good idea. The less material available for AI to train on, the harder it might be for someone to create a convincing deepfake of you. Review your privacy settings on social media and check who can see your content. These are basic steps, but they help.

If you encounter a suspicious image or video, especially one that seems to show someone in an unusual or compromising situation, approach it with skepticism. Look for signs of manipulation, though these can be subtle. Learning what deepfakes are, how they are made, and how to identify them is useful for everyone.

Supporting and advocating for stronger laws and regulations against the non-consensual creation and sharing of deepfakes is also important. Many countries are starting to address this, but there's still a long way to go to ensure legal protections are in place for victims. This is a collective effort, and everyone's voice can make a difference.

Platforms also have a role to play. Social media companies and image hosting sites need to invest in better detection tools and have clear policies for removing harmful deepfakes quickly. They should also make it easy for users to report such content. Keeping users safe is part of their responsibility.
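One detection approach a platform might use for content that has already been reported is hash matching: store a compact fingerprint of the reported image and compare new uploads against it. The sketch below is a minimal illustration, not any platform's actual system. It assumes each image has already been reduced to a small grid of grayscale values, and the function names and the `max_distance` threshold are hypothetical.

```python
def average_hash(pixels):
    """Fingerprint a small grayscale grid (values 0-255) as a list of 0/1 bits.

    Each bit records whether a pixel is brighter than the grid's mean,
    so small brightness changes usually leave the fingerprint unchanged.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Count the positions where two equal-length fingerprints differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


def looks_like_known_image(candidate, known_hash, max_distance=2):
    """Return True if the candidate grid is close to a reported fingerprint."""
    return hamming_distance(average_hash(candidate), known_hash) <= max_distance


# A reported image, assumed to be already downscaled to a 4x4 grayscale grid.
reported = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
known_hash = average_hash(reported)

# A slightly brightened re-upload of the same image, and an unrelated image.
reupload = [[min(255, value + 5) for value in row] for row in reported]
unrelated = [[10, 10, 200, 200] for _ in range(4)]

print(looks_like_known_image(reupload, known_hash))   # True
print(looks_like_known_image(unrelated, known_hash))  # False
```

The platform only needs to keep the fingerprint, not the harmful image itself. Real systems use much more robust fingerprints than this toy average hash.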

Educating younger generations about digital literacy and the risks of deepfakes is vital. Teaching them to critically evaluate online content and understand the importance of consent can help prevent future harm. This includes discussions about what is acceptable to share and what is not, and the serious consequences of creating or spreading harmful content.

Finally, if you or someone you know becomes a victim of deepfake manipulation, seeking support is crucial. There are organizations and resources available that can help with legal advice, emotional support, and strategies for content removal. Remember, you are not alone, and help is available.

The Future of Deepfake Technology

Looking ahead, the future of deepfake technology, including applications like the deepfake AI clothes remover, is likely to be a constant push and pull between creation and detection. As AI gets better at making convincing fakes, other AI tools are being developed to spot them. It's a bit like an arms race, where each side keeps getting more sophisticated.

Researchers are working on new methods to watermark or authenticate digital media, making it easier to verify that an image or video is original and hasn't been tampered with. This could involve embedding invisible codes into content at the point of creation. It's a promising area of study and could offer some real solutions.
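To make the authentication idea concrete, here is a minimal sketch using only Python's standard library. It is a simplified stand-in rather than a true watermark: instead of hiding a code inside the pixels, it computes a keyed tag (HMAC-SHA256) over the file's bytes at creation time, so any later change to the bytes can be detected. The key, the sample bytes, and the function names are hypothetical, and real provenance schemes typically rely on public-key signatures instead of a shared secret.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex tag that binds these exact bytes to the key holder."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def is_unmodified(media_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Check the bytes against a previously issued tag."""
    expected = sign_media(media_bytes, secret_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Hypothetical values standing in for a capture device's key and an image file.
key = b"hypothetical-device-key"
original = b"pretend these are the bytes of an image file"
tag = sign_media(original, key)

tampered = original + b"\x00"  # any edit, however small, changes the tag

print(is_unmodified(original, key, tag))  # True
print(is_unmodified(tampered, key, tag))  # False
```

A tag like this only shows that the bytes are unchanged since signing. It says nothing about whether the original content was genuine in the first place.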

There's also a growing focus on ethical AI development. This means building AI systems with safeguards in place to prevent misuse, or at least to make it harder. It involves considering the potential negative impacts of AI from the very beginning of its design, which is a big shift in how many developers approach AI.

The legal landscape will continue to evolve as well. Governments around the world are becoming more aware of the dangers of deepfakes and are exploring ways to regulate their creation and distribution, particularly when they involve harm or non-consensual content. This could mean new laws, stricter penalties, and better ways to prosecute those who misuse the technology.

Public awareness will also play a key role. The more people understand what deepfakes are and how they work, the less likely they are to be fooled by them. Continuous education and media literacy programs will be essential in helping everyone navigate an increasingly complex digital world. It's about building a more informed society.

While the deepfake AI clothes remover is a concerning application, it's part of a broader conversation about synthetic media. The same AI techniques can be used for positive things, like creating realistic avatars for virtual reality, helping with movie special effects, or even aiding in medical training. The challenge is to encourage the good uses while stopping the harmful ones, which is a big task.

The ongoing development of AI means that new capabilities will keep appearing. Staying informed and taking part in the conversation about how these powerful tools are used is something we all need to do. It's about shaping a digital future that respects privacy and promotes safety for everyone.

Frequently Asked Questions

What is a deepfake?

A deepfake is a convincing fake image, video, or audio recording created with artificial intelligence. It usually refers to synthetic media in which a person in an image or video is swapped with another person's likeness. In short, it is something that looks real but isn't, created by AI.

How are deepfakes made?

Deepfakes are created using deep learning technology, a branch of AI loosely modeled on how humans recognize patterns. These AI models analyze many images and videos of a person, learn their features, and then use that knowledge to create new, manipulated content. It's a bit like teaching a computer to imitate someone very closely.

What are the dangers of deepfake technology?

The dangers include invasions of privacy, the creation of non-consensual intimate imagery, harassment, and the spread of misinformation. Tampered images, videos, or audio recordings can be used for many harmful purposes, causing significant emotional distress and reputational damage to individuals. They also undermine trust in what we see and hear online, which is a big problem.